The Serendipitome is a concept.

The Serendipitome is the totality of attributes and constitutive components that make up the phenomenon otherwise known as serendipity.

Serendipity is the unforeseen encounter with a joyful, fortunate, or prosperous happenstance.

The Serendipitome is the sum of the elements and circumstances in a person’s milieu that bring about serendipitous encounters.

The Serendipitome framework consists of a mindset and a skill set.

A high state of consciousness is required within the Serendipitome milieu. It is a mental state of constant positive expectation.

Every apparent delay is considered a stepping stone forward.

The serendipity mindset holds that serendipitous encounters occur as routine phenomena in any person’s regular life. It is a mind that expects serendipitous encounters, and so perceives opportune happenstances when they arrive.

When a high mental state of serendipity expectations prevails, serendipitous encounters are perceived.

The required serendipity skill set consists of three components.

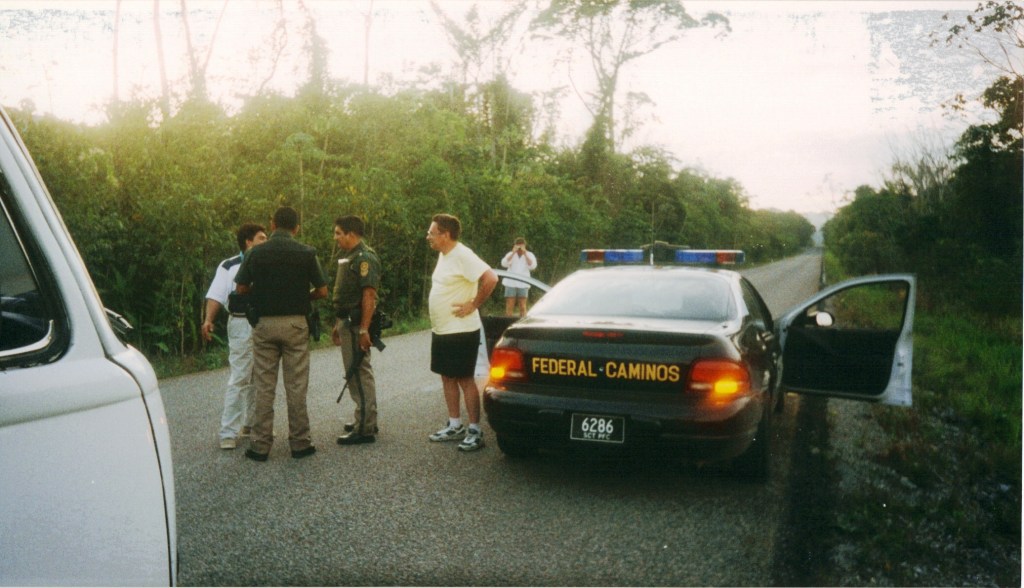

First is Sociability: the penchant for meeting and working with other people. The more people one meets and works with, the higher the probability of random serendipitous encounters.

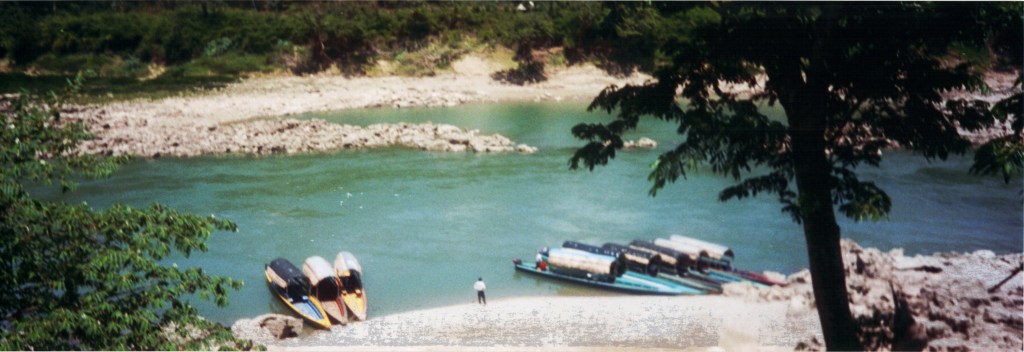

The second skill is Adventurism: the passion to explore and to encounter unknown environments and continents, which brings about more, and more varied, breaks that present unexpected prosperous happenings.

The third skill, Sagacity, is the cognitive talent to perceive the scattered dots in our lives and mentally connect them into a meaningful, flourishing experience.

Deep Dive into the Serendipitome: The Framework for Cultivating Fortunate Happenstances

Core Definition and Etymology.

The Serendipitome represents the holistic ecosystem of attributes, circumstances, and other constitutive elements that collectively generate the phenomenon known as serendipity. Coined as a portmanteau of “serendipity” and “-ome” (from genome or biome, denoting a complete system or totality), it encapsulates all internal and external factors in an individual’s life that orchestrate unforeseen, joyful, prosperous, or otherwise advantageous encounters.

The word serendipity was coined by Horace Walpole in 1754 in reference to the Persian fairy tale The Three Princes of Serendip, whose protagonists make accidental discoveries through sagacity. It is traditionally defined as “the occurrence of events by chance in a happy or beneficial way.”

The Serendipitome elevates this from mere “luck” to a structured, cultivable domain—not random chaos, but a dynamic interplay of mindset, skills, environment, and consciousness that amplifies the frequency and impact of such events.

In essence:

- Serendipity = The event (the fortunate happenstance).

- Serendipitome = The system (the milieu, mindset, and mechanisms that make the event probable and perceptible).

The Serendipitome Framework = Mindset + Skill-Set + Enabling Milieu.

The Serendipitome is not a passive concept. It is an active framework requiring deliberate cultivation. It operates on two pillars, a mindset and a skill set, embedded within the broader environmental milieu.

A heightened state of consciousness underpins both, transforming ordinary life into a fertile ground for serendipitous yields.

Consider the story of Aristotle Onassis (1906-1975), a maritime freight and tanker shipping tycoon. At one time he owned a fleet of seventy ocean-going vessels. All this he accomplished in a lifetime of 69 years.

Onassis began life as a Greek Christian refugee who, with his parents, escaped the persecution by the Muslim Turks in Smyrna. He later emigrated to Argentina on a refugee passport. There Onassis went to school and eventually succeeded in business. He bought used ships. After World War II he observed the ruined shipyard in Hamburg, Germany, and took it upon himself to rebuild it. That was a sagacious observation and undertaking. In the process he built his own fleet. By 1956 he owned a remarkable fleet of oil tankers.

Then, in 1956/57, the Suez Canal was blocked by the Egyptian government, and with it the oil shipments from the Middle East to Europe and America came to a halt. One person’s war is another’s serendipitous opportunity. Onassis had his fleet of tankers ready to transport the oil (energy) around the Cape of Good Hope. Onassis amassed millions on a daily basis until the Suez Canal was reopened.

In 1953 Onassis started to buy real estate in the principality of Monaco. He noticed that the principality was desolate yet had the potential to attract the nobility and the wealthy crowd of Europe and America. A tax haven is always attractive, as are casinos. A sagacious judgment. He was initially welcomed by Monaco’s ruler, Prince Rainier III, as the tiny country required investment. Now Onassis became both rich and famous. In the course of events, a Hollywood star, Grace Kelly, married the Prince of Monaco and became Her Serene Highness Princess Grace. Business was even better.

Then Sir Winston Churchill, Britain’s famed politician, frequently sought the company of Onassis.

Next, Onassis acquired, or rather rescued financially and operationally, Greece’s national airline, which became Olympic Airways. He became the proud owner of the airline.

Serendipity loves social company. While doing big business, he attracted the era’s classiest opera soprano diva, Maria Callas. It was a mutually rewarding relationship.

With the imprimatur of Sir Winston, Onassis followed the British statesman’s suggestion to meet a young US senator from Massachusetts, John F. Kennedy, and his lovely wife, Jacqueline. That was a historic, far-sighted social investment of his treasured time. As serendipity would have it, tragic circumstances made Jacqueline available a few years later. Now Onassis was married to a former First Lady of the U.S. and had a “Kennedy” cachet.

Onassis passed at age 69 from complications of myasthenia gravis. The Boeing 727 which transported Onassis’s remains to his private island was later purchased for US$100,000 by an American electrical engineer and turned into an unconventional private residence in Hillsboro, Oregon. Notoriety tags its price.

1. The Serendipitous Mindset: Expectation as a Perceptual Lens.

At the core is a state of constant positive expectation, akin to a psychological attraction field that draws in and magnifies opportunities.

This is not naive optimism. It requires a prepared mind (as Louis Pasteur noted: “Chance favors the prepared mind”).

- Key Principles:

- Routine Phenomenon: Serendipity is reframed as a commonplace occurrence, not an exceptional one. The mindset assumes that “random happy accidents” unfold daily in the flow of any person’s regular life; delays, detours, or disruptions are reinterpreted as stepping stones to greater outcomes.

- Perceptual Filtering: The brain’s reticular activating system (RAS) is often credited with a role here: expectation primes us to notice what aligns with our goals. Without such expectation, many potentially serendipitous events keep occurring but go unrecognized (e.g., overlooking a chance conversation that could lead to a career breakthrough).

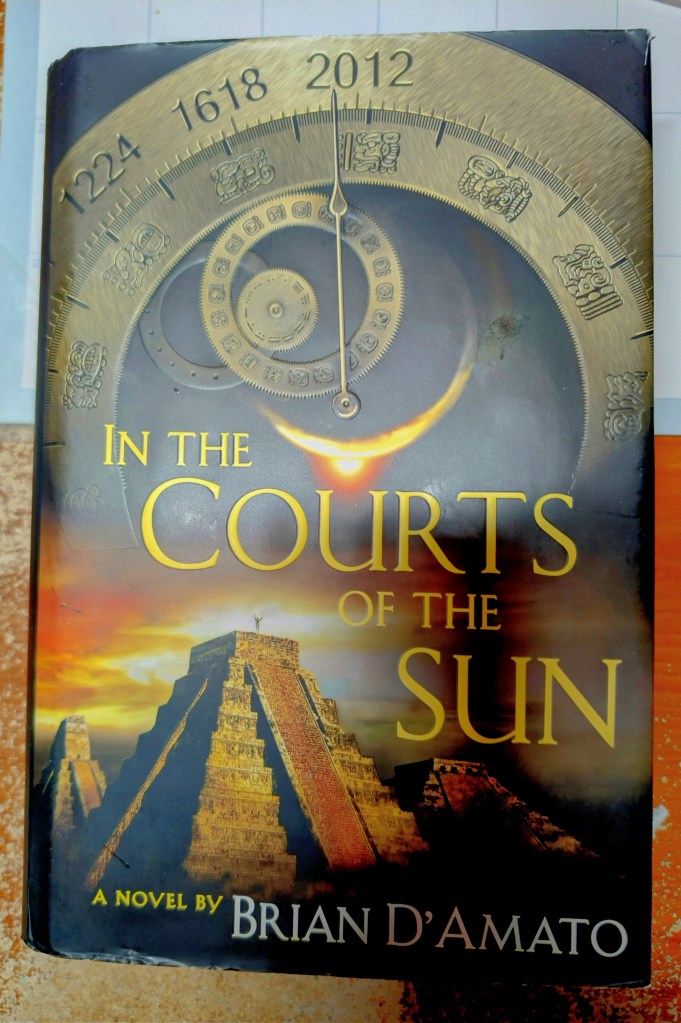

- Resilience to Apparent Setbacks: Every “delay” is a setup. A canceled flight might lead to a pivotal meeting in the airport lounge; a rejected idea might spark a superior iteration; a rejected manuscript might become a global best-seller (Chicken Soup for the Soul…).

Expansion: The serendipity mindset echoes concepts in positive psychology (e.g., Martin Seligman’s learned optimism) and quantum-inspired philosophies (e.g., the observer effect in perception). In practice, it involves daily rituals: journaling “expected serendipities,” visualizing open-ended outcomes, or adopting mantras like “What hidden gift is this obstacle concealing?”

2. The Serendipitous Skillset: Three Interlocking Competencies.

Skills transform the serendipity mindset into action, increasing the probability surface for encounters. These three are trainable aptitudes, not innate traits.

SOCIABILITY (The Social Network Expander):

The propensity to initiate, nurture, and collaborate with diverse people. Probability theory underpins this: the more nodes in your social graph, the higher the odds of combinatorial sparks.

- Mechanics: Weak ties (acquaintances) are goldmines, per Mark Granovetter’s “strength of weak ties” theory: they are our bridges to disparate worlds. As the saying goes, “It’s not what you know, but who you know.”

- Practices: Attending unrelated events, using “connector questions” (e.g., “Who else should I know?”), and maintaining a diverse contact ecosystem (online/offline, cross-industry): a functional network of partners, customers, and stakeholders who collaborate for mutual benefit, as per Keith Ferrazzi’s “Never Eat Alone.”

- Impact: A single chat over a cup of coffee might yield a co-founder, an investor, or an idea that alters a business’s trajectory.
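The “probability theory” behind sociability can be made concrete with a toy model. The sketch below assumes, purely for illustration, that every contact independently carries the same small chance p of producing a fortunate break; the function names and the 1% figure are hypothetical, not measured. Under that assumption the chance of at least one break among n contacts is 1 − (1 − p)^n, and the number of possible pairings in a network of n people, the “combinatorial sparks,” grows as n(n − 1)/2.

```python
from math import comb


def chance_of_at_least_one_break(n_contacts: int, p: float) -> float:
    """Probability that at least one of n contacts yields a fortunate break.

    Hypothetical model: every contact has the same, independent chance p.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    return 1.0 - (1.0 - p) ** n_contacts


def possible_pairings(n_people: int) -> int:
    """Distinct pairs that could meet in a network of n people: n(n-1)/2."""
    return comb(n_people, 2)


if __name__ == "__main__":
    p = 0.01  # hypothetical 1% chance per contact, for illustration only
    for n in (10, 50, 200):
        print(
            f"{n:>4} contacts: P(at least one break) = "
            f"{chance_of_at_least_one_break(n, p):.2f}, "
            f"possible pairings = {possible_pairings(n)}"
        )
```

With p = 0.01, growing from 10 to 200 contacts lifts the chance of at least one break from about 10% to about 87%, while possible pairings grow from 45 to 19,900. The independence assumption is the weak point, since real encounters are correlated, so treat the numbers as intuition rather than prediction.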

ADVENTURISM (The Exploration Engine):

A deliberate embrace of novelty, uncertainty, and the unknown. This counters the stagnation of habit by injecting variability into routines.

Before we go any further, let’s consider the life story of Charles Darwin. Darwin is known as the thinker and author who formulated the Theory of Evolution. He is recognized as one of the most influential persons in history. In fact, he is ranked number 16 in Michael H. Hart’s book The 100: A Ranking of the Most Influential Persons in History. He got there as a result of his adventurous life in his younger years and his sagacity as a thinker who connected the dots in his later years.

In December 1831 (age 22), he set sail aboard HMS Beagle, which circled the globe for almost five years. During this period he collected natural specimens of flora and fauna, as well as inanimate geological samples, from around the globe. He collected over 5,000 samples, which he brought back home on October 2, 1836. Upon his return he was 27 years old. He settled with his family, examined his many samples, and contemplated. The items he brought back from his adventurous journey were at first just “dots”. Gradually Darwin connected these “dots” into a meaningful pattern, now familiar to us as the Theory of Evolution. Darwin had the intellectual power and sagacity to construct the framework of evolution, the origin of species, and the descent of man. Darwin was not looking for fame or fortune. Born into a wealthy family, he had no need to chase fortune, yet fame pursued him: he became one of the most influential scientists who ever lived.

Let’s now move to the third skill of Sagacity and pattern recognition.

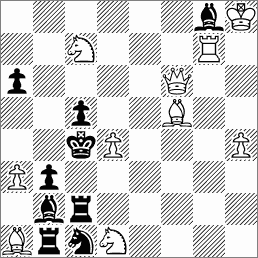

PATTERN CONNECTION (The Synthesis Forge):

Sagacity is a cognitive faculty to detect disparate “dots” (fragments of information, people, different ideas) and forge them into coherent, value-creating entities. This is bisociation (Arthur Koestler’s term for linking unrelated matrices).

- Mechanics: Pattern recognition involves divergent thinking, analogy-making, and mental-model flexibility. Useful tools include mind mapping and the “connective inquiry” method (asking “How might this relate to X?”).

- Practices: Cultivating new insight through meditation (e.g., mindfulness to quiet mental noise), diverse reading (to cross-pollinate fields), or “dot-collecting” journals.

- Impact: Steve Jobs connected calligraphy with computing aesthetics, birthing Apple’s typography edge.

Interplay of Skills: These skills form a virtuous cycle — sociability exposes dots => adventurism scatters them variably => pattern connection integrates them. Deficiencies in one diminish the whole (e.g., high sociability without connection yields superficial chats).

The ENABLING MILIEU: External Circumstances and Environment.

Beyond internal factors, the Serendipitome includes the individual person’s total ecosystem—physical, social, cultural, and temporal contexts that amplify encounters.

- Components:

- Physical Spaces: “Third places” (cafes, co-working hubs, civic clubs, conferences) designed for collisions (e.g., Pixar’s central atrium forces cross-department mingling).

- Digital Ecosystems: Algorithms on platforms like LinkedIn or X can serendipitously surface connections; curated feeds balance echo chambers with novelty.

- Temporal Windows: Life stages (e.g., post-graduation flux) or crises (pivots) heighten receptivity.

- Cultural Norms: Societies that value openness over rigidity (e.g., Silicon Valley’s “fail fast” ethos).

Expansion: Modern tools and designs enhance this, such as AI recommendation systems acting as “serendipity engines” (e.g., Spotify’s Discover Weekly) or urban design principles (Jane Jacobs’ “sidewalk ballet” of organic interactions). When at the airport (as I frequently am), you meet more strangers than you can handle; watch the colorful flow of passengers along the terminal concourse.
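A feed that “balances echo chambers with novelty” can be sketched with a simple exploration rule borrowed from recommender and bandit systems, usually called epsilon-greedy: most slots show familiar items, and each slot has probability ε of going to something new. This is an assumed, swapped-in technique for illustration; it is not how LinkedIn, X, or Spotify actually rank content, and the function name, item lists, and ε = 0.3 are hypothetical.

```python
import random


def mixed_feed(familiar, novel, length, epsilon=0.3, seed=None):
    """Build a feed in which each slot is novel with probability epsilon.

    `familiar` is assumed to be in preferred order; `novel` items are shuffled.
    When one pool runs out, the other fills the remaining slots. No repeats.
    """
    if not 0.0 <= epsilon <= 1.0:
        raise ValueError("epsilon must be between 0 and 1")
    rng = random.Random(seed)
    familiar_pool = list(familiar)
    novel_pool = list(novel)
    rng.shuffle(novel_pool)  # novelty should not arrive in a fixed order
    feed = []
    while len(feed) < length and (familiar_pool or novel_pool):
        explore = rng.random() < epsilon
        if (explore and novel_pool) or not familiar_pool:
            feed.append(novel_pool.pop())  # exploration slot
        else:
            feed.append(familiar_pool.pop(0))  # exploitation slot
    return feed


familiar = ["industry news", "colleague's post", "favorite podcast", "team update"]
novel = ["urban design talk", "marine biology thread", "jazz history essay"]
print(mixed_feed(familiar, novel, length=6, epsilon=0.3, seed=1))
```

Setting ε to 0 reproduces a pure echo chamber, and setting it to 1 gives a feed of nothing but strangers; the interesting behavior lies in between.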

Higher Consciousness: The Overarching State.

A “higher state of consciousness” integrates the framework—mindful presence, ego dissolution, and flow states (per Mihaly Csikszentmihalyi). This transcends ego-driven striving, allowing intuitive perception of synchronicities (Jung’s term for meaningful coincidences). Higher consciousness in this case is a “constant positive expectation” where every apparent delay or setback is reframed as a “steppingstone forward.”

- Cultivation: Practices like meditation, nature immersion, or psychedelics (in controlled contexts) heighten conscious awareness, reducing filters that block serendipity.

Applications and Empirical Support

- Personal Development: Build a personal “Serendipitome audit”: map your mindset gaps, skill proficiencies, and milieu richness.

- Organizational: Companies like Google allocate “20% time” for adventurism; 3M fosters sociability via cross-functional teams.

- Evidence: Studies (e.g., in Network Science) show diverse networks predict innovation; psychological research links openness-to-experience (Big Five trait) with serendipitous outcomes.

- Access AI and Agentic synthesis.
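A personal “Serendipitome audit” can be kept as a simple self-scoring record. The sketch below assumes a hypothetical 1-to-5 self-rating for each component named in this framework (mindset, the three skills, and milieu) and lists the components that fall below a chosen target, weakest first; the class name, scale, and default target of 4 are illustrative choices, not a validated instrument.

```python
from dataclasses import dataclass, fields


@dataclass
class SerendipitomeAudit:
    """Hypothetical self-assessment: each component scored 1 (weak) to 5 (strong)."""

    mindset: int
    sociability: int
    adventurism: int
    sagacity: int
    milieu: int

    def __post_init__(self):
        # Reject scores outside the assumed 1-5 scale.
        for f in fields(self):
            score = getattr(self, f.name)
            if not 1 <= score <= 5:
                raise ValueError(f"{f.name} must be scored 1-5, got {score}")

    def scores(self) -> dict[str, int]:
        return {f.name: getattr(self, f.name) for f in fields(self)}

    def gaps(self, target: int = 4) -> list[tuple[str, int]]:
        """Components scoring below the target, weakest first (ties keep field order)."""
        below = [(name, s) for name, s in self.scores().items() if s < target]
        return sorted(below, key=lambda item: item[1])


audit = SerendipitomeAudit(mindset=4, sociability=2, adventurism=3, sagacity=5, milieu=3)
print(audit.gaps())  # → [('sociability', 2), ('adventurism', 3), ('milieu', 3)]
```

Sorting the gaps weakest first reflects the framework’s point that a deficiency in one component diminishes the whole, so the lowest score is the natural place to start.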

Potential Pitfalls and Balance.

Watch out: over-reliance risks “serendipity chasing” burnout or the neglect of deliberate planning.

Balance your activity with strategy: treat the Serendipitome as 70% preparation and 30% openness.

In summary, the Serendipitome demystifies “luck” and renders it engineerable. By nurturing expectations, honing skills, and optimizing environments, individuals don’t wait for fortune; they design and engineer its arrival.

As the Three Princes of Serendip exemplified, sagacity turns random events into destiny.

SUBSCRIBE TO THIS BLOG. IT’S FREE, and it always will remain free.

© Mandy Lender 2026.

www.mandylender.com www.mandylender.net www.attractome.com www.visionofhabakkuk.com

Tags: #serendipity #serendipitome #engineeredserendipity #guerrillaserendipity #aristotleonassis #charlesdarwin #sagacity #serendipityskillset #serendipitymindset